
That's Fresh! Newsletter

Read a selection of our past issues.

- Clearbox AI Goes Open Source – Meet Synthetic Kit! 🚀 Our Open Source journey has begun! (January 27, 2025)

- Unwrap Our Latest News Before Christmas 🎁 Catch our latest AI series and learn about groundbreaking tech developments (December 18, 2024)

- SURE, an open-source library for synthetic data evaluation 🌎 Discover our open-source project! (November 27, 2024)

- 🙌 NumPy 2.0 is almost out! And: Our new data preprocessor with Polars | Interview with S2E at Italy Insurance Forum (June 5, 2024)

- 😮 What a month for new LLMs! And: Datacamp webinar with Shalini (May 22, 2024)

- ✨ GenAI true value lies beyond operational enhancements. And: The Future of Data Protection | New updates about AI Act (April 24, 2024)

- 👁 What are 1-bit Large Language Models? And: LinkedIn Live about AI Act | Mastercard's Country Manager interviewed our CEO (March 6, 2024)

- LLaMAntino - Effective Text Generation in Italian. And: Creating train and test datasets | Use case: Detecting money muling with the help of synthetic data (February 21, 2024)

- 🗞️ The NY Times sues OpenAI and Microsoft. And: Can AI work with little data? | La Stampa: AI means development (January 10, 2024)

- Synthetic Data 101 🚨 And: Why synthetic data? | New project with Poste Italiane (November 8, 2023)

- How easy is it for LLM to infer sensitive information? And: Why is data sharing important? | Our new partnership with S2E (October 25, 2023)

- Have you heard of Pythia? And: Data augmentation tutorial | Did you say AI apocalypse? (August 30, 2023)

- Google's answer to ChatGPT. And: Generating synthetic data within relational databases. Let's meet at WAICF! (February 8, 2023)

- Understanding ChatGPT better. And: How to deal with imbalanced data. More about our product (December 14, 2022)

- A curated list of failed ML projects. And: How to build a data strategy. Clearbox AI and Bearing Point partnership (November 16, 2022)

- Our open source library is now on GitHub. And: Clearbox AI on Cybernews (June 22, 2022)

- Discovering Dagster. And: Quantifying privacy risks. Use case: a synthetic data sandbox to freely share data (June 8, 2022)

- Can interaction data be fully anonymized? And: Synthetic Data for privacy preservation: understanding privacy risks. Discover our Enterprise solution (April 6, 2022)

- What are GFlow nets? And: Improve models with Synthetic Data. Use case: augment financial time series (March 16, 2022)

- The European Commission selected us for Women TechEU pilot project! And: What is Synthetic Data. The new Synthetic Data platform (March 9, 2022)

- The EDPS on Synthetic Data. And: From raw to good quality data. Changelogs: now you can upload unlabeled datasets (February 23, 2022)

- 2022 Gartner’s Technology Trends. And: How to harness the power of AI in companies. Changelogs: new metrics available for your synthetic dataset (February 9, 2022)

FROM THE AI WORLD

Have you heard of LLaMAntino, the Italian version of Meta's LLM Llama 2, developed by the University of Bari? The model was created to improve text generation and language comprehension in Italian, a language that is heavily underrepresented in the datasets used to train Llama models: only 11% of the training data is in a language other than English.

To create LLaMAntino, the researchers adopted two strategies: adapting the model to Italian with QLoRA, a parameter-efficient fine-tuning technique that makes it possible to transfer linguistic knowledge from general pre-trained models (like Llama) to models specialized for a specific language, and enriching the training dataset with Italian texts. Thanks to these techniques and to the Leonardo HPC supercomputer, several LLaMAntino models were developed, and they are now available on Hugging Face.
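QLoRA builds on LoRA: the pre-trained weight matrix W stays frozen (in QLoRA it is also stored in 4-bit precision) and only two small low-rank matrices, B and A, are trained. The toy sketch below, in plain Python, illustrates only the low-rank part and leaves quantization out entirely. The helper names, the rank r, alpha and the 0.01 initialization scale are illustrative assumptions, not the settings used for LLaMAntino.

```python
import random

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_init(d, k, r, seed=0):
    """LoRA-style init: A (r x k) small random values, B (d x r) zeros,
    so the adapted layer starts out identical to the frozen one.
    The 0.01 standard deviation is an arbitrary choice for this sketch."""
    rng = random.Random(seed)
    A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]
    B = [[0.0] * r for _ in range(d)]
    return A, B

def adapted_weight(W, A, B, alpha, r):
    """Effective weight W + (alpha / r) * B @ A; W itself is never modified."""
    delta = matmul(B, A)
    scale = alpha / r
    return [[w + scale * dlt for w, dlt in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

def trainable_params(d, k, r):
    """(full fine-tuning, LoRA) trainable parameter counts for one d x k matrix."""
    return d * k, r * (d + k)
```

For example, `trainable_params(4096, 4096, 8)` returns `(16777216, 65536)`: for a single 4096 × 4096 matrix, a rank-8 adapter trains about 0.4% as many parameters as full fine-tuning, which is what makes adapting a large model to a new language affordable.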

I believe the LLaMAntino project is an exciting step forward in adapting pre-trained language models to the Italian context. It not only provides models that are more proficient in Italian but also opens up new opportunities for understanding how language models learn a language, and it raises interest in applying the same approach to other languages and linguistic contexts.

Italian text generation

The developers of LLaMAntino explored various tuning approaches to ensure high-quality Italian text generation for common tasks.

CLEARBOX AI

Detecting money muling

In this use case, our synthetic data generation improved the performance of money muling detection models, which typically have to be trained on highly imbalanced data.
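Our synthetic data generator is not shown here. As a generic stand-in, the sketch below rebalances a binary dataset with a simplified, SMOTE-like interpolation: each synthetic minority row lies on the segment between two real minority rows. Unlike SMOTE, which picks neighbours among the k nearest, it pairs minority rows at random; the function names and the toy data are hypothetical.

```python
import random

def interpolate_minority(minority, n_new, seed=0):
    """Create n_new synthetic rows, each a random point on the segment between
    two randomly chosen minority rows (simplified, SMOTE-like interpolation).
    Requires at least two minority rows."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        a, b = rng.sample(minority, 2)  # two distinct minority rows
        t = rng.random()                # position along the segment a -> b
        synthetic.append([x + t * (y - x) for x, y in zip(a, b)])
    return synthetic

def rebalance(rows, labels, minority_label=1, seed=0):
    """Append synthetic minority rows until both classes have the same count.
    Assumes binary labels; the original rows are kept unchanged at the front."""
    minority = [r for r, y in zip(rows, labels) if y == minority_label]
    n_majority = len(rows) - len(minority)
    new_rows = interpolate_minority(minority, n_majority - len(minority), seed)
    return rows + new_rows, labels + [minority_label] * len(new_rows)
```

On a toy set of 8 legitimate accounts (label 0) and 2 mule accounts (label 1), `rebalance` returns 8 rows of each class, with the 6 new mule rows lying between the two real ones.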

BLOG POST

Creating train and test datasets

This step lays the groundwork for training models effectively, ensuring they can learn from one set of data and then be evaluated on a separate, unseen one.
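A minimal, standard-library-only sketch of a stratified split, which keeps the class proportions the same in the train and test sets; this matters for imbalanced problems such as money muling, where a purely random split can leave very few positives in the test set. In practice, scikit-learn's `train_test_split` with its `stratify` argument does this; the `stratified_split` function below is a hypothetical helper that mirrors its return order.

```python
import random
from collections import defaultdict

def stratified_split(rows, labels, test_size=0.2, seed=42):
    """Split rows/labels into train and test sets, sampling each class separately
    so both sets keep roughly the original class proportions."""
    by_class = defaultdict(list)
    for row, label in zip(rows, labels):
        by_class[label].append(row)

    rng = random.Random(seed)  # fixed seed -> reproducible split
    X_train, X_test, y_train, y_test = [], [], [], []
    for label, members in by_class.items():
        members = members[:]  # copy so the caller's data is not reordered
        rng.shuffle(members)
        n_test = round(len(members) * test_size)
        X_test.extend(members[:n_test])
        y_test.extend([label] * n_test)
        X_train.extend(members[n_test:])
        y_train.extend([label] * (len(members) - n_test))
    # Note: outputs are grouped by class; shuffle before mini-batch training.
    return X_train, X_test, y_train, y_test
```

With 90 negatives and 10 positives and `test_size=0.2`, the test set gets 18 negatives and 2 positives, and no row appears in both sets.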

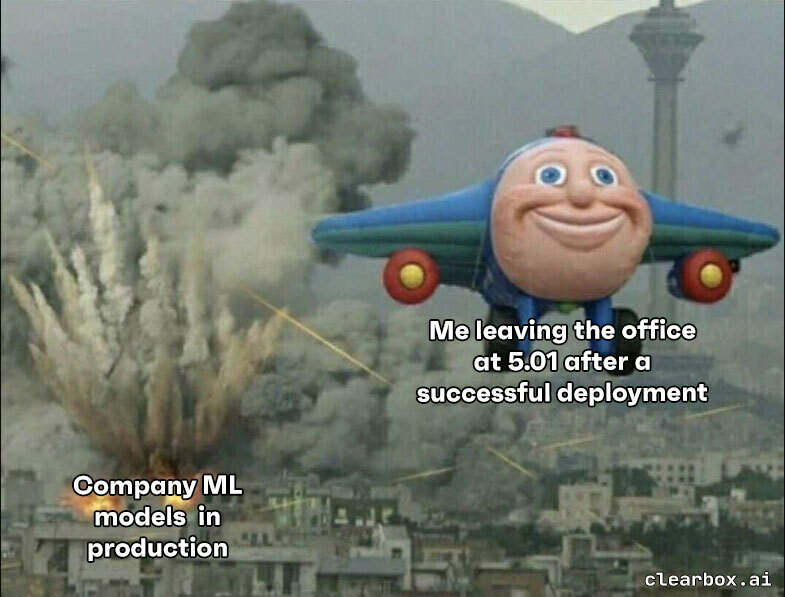

WEEKLY MEME
