Introduction

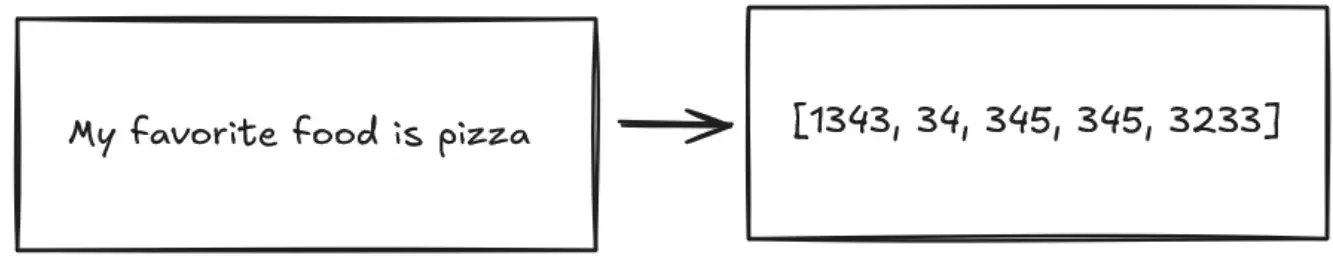

In this blog post we will talk about one of the most important parts of a large language model (LLM): the tokenizer. When we think of large language models like GPT or Gemini, our minds often jump straight to their capabilities: writing essays, answering questions, generating code. But behind the scenes, one of the most critical and often overlooked components is the tokenizer. Before an LLM can understand or generate text, it first needs to convert that text into a numerical format it can work with. This transformation happens through tokenization, a process that breaks human language down into manageable chunks, called tokens, that the model can process.

At a high level, tokenizers are tools that break raw text into smaller pieces, called tokens, which can be processed by machine learning models. This step is crucial because models do not operate directly on raw text; they operate on numerical representations of these tokens, typically one integer ID per token. Tokenization allows language models to:

- Handle input text efficiently,

- Learn patterns within text at an appropriate level of granularity,

- Manage vocabulary size and model complexity.

It's important to note that the tokenizer is built before the language model is trained. Tokenization is an independent preprocessing step: tokenizers are typically built, trained, and finalized separately from the language models themselves. Once the tokenizer is fixed, it converts all input text into token sequences, giving a consistent, structured representation that the model learns from during training and relies on again at inference time.
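To make the "build once, then reuse" idea concrete, here is a minimal Python sketch of a toy character-level tokenizer. The class name `CharTokenizer` and its methods are illustrative assumptions, not any library's API: the vocabulary is fixed from a corpus once, and afterwards the same mapping turns text into integer IDs and back.

```python
class CharTokenizer:
    """Toy character-level tokenizer: the vocabulary is built once, then reused unchanged."""

    def __init__(self, corpus: str):
        # "Training" here just means fixing the vocabulary from a corpus.
        self.itos = sorted(set(corpus))                        # ID -> character
        self.stoi = {ch: i for i, ch in enumerate(self.itos)}  # character -> ID

    def encode(self, text: str) -> list[int]:
        # Text -> sequence of integer IDs the model actually consumes.
        return [self.stoi[ch] for ch in text]

    def decode(self, ids: list[int]) -> str:
        # Integer IDs -> text, e.g. to turn model output back into a string.
        return "".join(self.itos[i] for i in ids)


tok = CharTokenizer("hello world")
ids = tok.encode("hello")
print(ids)              # [3, 2, 4, 4, 5]
print(tok.decode(ids))  # hello
```

Because the vocabulary is frozen, encoding a character that never appeared in the corpus raises a `KeyError` in this sketch; real tokenizers deal with this through mechanisms such as byte-level fallbacks or an "unknown" token.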

Common tokenization schemes

There are different ways to break a sentence into smaller pieces: at the character level, the word level, or the subword level.

Character-level tokenization treats each individual character as a separate token. Its main benefits are simplicity, applicability to any language, and a small vocabulary. However, it produces very long token sequences, which makes it harder for a language model to capture meaningful dependencies and patterns, makes processing less efficient, and can diminish performance.

Word-level tokenization, on the other hand, treats each whole word as a distinct token. This yields shorter, more human-readable sequences that align closely with natural language structure. However, it leads to a very large vocabulary that is difficult to manage, and any rare, new, or unseen word missing from that vocabulary can only be mapped to a generic "unknown" token. This limits the model's flexibility and its ability to generalize.

Subword-level tokenization strikes a balance between the two. It breaks words into smaller, meaningful subword units, keeping both vocabulary size and sequence length manageable. Because an unfamiliar word can usually be assembled from known subwords, this method also copes better with out-of-vocabulary words, allowing language models to generalize more effectively. The trade-off is that subword tokenization is more complex to implement and produces tokens that are not always intuitive or easily interpretable for humans.
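The trade-off between the first two schemes shows up even on a toy corpus. The following Python sketch builds a character vocabulary and a word vocabulary from the same text, then encodes a sentence containing a word, "cot", that never appeared in the corpus. The corpus, the `encode` helper, and the `"<unk>"` marker are illustrative assumptions.

```python
def encode(tokens: list[str], vocab: set[str], unk: str = "<unk>") -> list[str]:
    """Map any token missing from the vocabulary to an "unknown" marker."""
    return [t if t in vocab else unk for t in tokens]


corpus = "the cat sat on the mat"
char_vocab = set(corpus)          # 10 symbols: 9 letters plus the space
word_vocab = set(corpus.split())  # 5 distinct words

text = "the cot sat"              # "cot" never appears in the corpus
char_tokens = encode(list(text), char_vocab)
word_tokens = encode(text.split(), word_vocab)

print(len(char_tokens), char_tokens.count("<unk>"))  # 11 0
print(len(word_tokens), word_tokens)                 # 3 ['the', '<unk>', 'sat']
```

The character-level sequence is almost four times longer but contains no unknowns, while the word-level sequence is short but loses "cot" entirely.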

For example, take the word "going". In a character-level scheme, it would be split into individual tokens like "g", "o", "i", "n", "g". A word-level tokenizer would treat "going" as a single token. But with subword-level tokenization, it might be broken into "go" and "ing". This allows the tokenizer to efficiently represent both common stems and suffixes, so it can handle variations like "goes", "gone", or "goer" without needing to store each form individually in the vocabulary.
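Once a subword vocabulary exists, one simple way to segment a word is greedy longest-match: repeatedly take the longest vocabulary entry that matches at the current position. This is the strategy WordPiece uses when tokenizing, not the merge-based BPE procedure; the vocabulary below is a hypothetical example chosen to contain the stem "go", a few suffixes, and single letters as a fallback.

```python
def longest_match(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match-first segmentation of `word` using `vocab`."""
    pieces, start = [], 0
    while start < len(word):
        # Try the longest remaining substring first, then shrink it.
        for end in range(len(word), start, -1):
            if word[start:end] in vocab:
                pieces.append(word[start:end])
                start = end
                break
        else:
            raise ValueError(f"cannot segment {word!r} at position {start}")
    return pieces


# Hypothetical vocabulary: a shared stem, a few suffixes, and single letters as a fallback.
vocab = {"go", "ing", "es", "er"} | set("abcdefghijklmnopqrstuvwxyz")

for w in ["going", "goes", "goer"]:
    print(w, longest_match(w, vocab))
# going ['go', 'ing']
# goes ['go', 'es']
# goer ['go', 'er']
```

All three words reuse the single "go" entry, which is how a subword vocabulary avoids storing every inflected form separately.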

Byte Pair Encoding tokenizers

Subword tokenizers are arguably the most widely used tokenizers in LLMs. A particularly popular subword algorithm is Byte Pair Encoding (BPE), which iteratively merges the most frequent pair of adjacent characters or subwords until a desired vocabulary size is reached. The algorithm works as follows:

Inputs:

- D: a set of strings (usually a text corpus).
- k: the target vocabulary size (desired number of tokens).

Procedure BPE(D, k):

- Initialize the vocabulary V with all unique characters in D.
  - Example: if D = {"hello", "world"}, V might start as {h, e, l, o, w, r, d}.
- Repeat while the vocabulary size |V| is less than the target k:
  - Find the most frequent adjacent pair of tokens (called a "bigram") in the dataset D. Denote it as (t_L, t_R).
  - Create a new token by merging the pair: t_NEW = t_L + t_R.
  - Add the new token to the vocabulary: V = V ∪ {t_NEW}.
  - Update the dataset by replacing all instances of the pair (t_L, t_R) with the new token t_NEW.
- Once the target vocabulary size is reached (|V| = k), return V.
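The procedure above translates almost line by line into Python. This is a minimal, unoptimized sketch that works on characters and counts pairs within each string; production BPE implementations typically operate on bytes, pre-split text into words first, and use much faster pair counting. The names `train_bpe` and `merge_pair` are illustrative, with `corpus` playing the role of D.

```python
from collections import Counter


def merge_pair(tokens: list[str], t_l: str, t_r: str) -> list[str]:
    """Replace every adjacent (t_l, t_r) in `tokens` with the merged token t_l + t_r."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and tokens[i] == t_l and tokens[i + 1] == t_r:
            merged.append(t_l + t_r)
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def train_bpe(corpus: list[str], k: int) -> tuple[set[str], list[tuple[str, str]]]:
    """Learn BPE merges from the strings in `corpus` until |V| reaches k."""
    # Initialize V with all unique characters; each string becomes a list of characters.
    vocab = {ch for s in corpus for ch in s}
    data = [list(s) for s in corpus]
    merges = []
    while len(vocab) < k:
        # Count every adjacent token pair (bigram) across the dataset.
        pair_counts = Counter()
        for tokens in data:
            pair_counts.update(zip(tokens, tokens[1:]))
        if not pair_counts:
            break  # every string is already a single token; V cannot grow further
        # Most frequent pair (t_L, t_R); ties go to the pair counted first.
        (t_l, t_r), _ = pair_counts.most_common(1)[0]
        vocab.add(t_l + t_r)       # V = V ∪ {t_NEW}
        merges.append((t_l, t_r))  # remember the merge order for tokenizing later
        data = [merge_pair(tokens, t_l, t_r) for tokens in data]  # update D
    return vocab, merges


vocab, merges = train_bpe(["going", "goes", "goer"], k=9)
print(merges)  # [('g', 'o'), ('go', 'e')]
```

On this tiny corpus the first merge is ("g", "o"), which occurs in all three words, followed by ("go", "e"). Keeping the ordered `merges` list, and not only the vocabulary, is what makes it possible to tokenize new text the same way later.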

Byte Pair Encoding (BPE) is among the most widely adopted tokenization methods in large language models today. For instance, GPT-4 uses a byte-level variant of the BPE algorithm. To gain an intuitive understanding of tokenization, you can explore the interactive tool TikTokenizer: enter different text samples and observe how they are segmented into subword tokens, with frequently occurring character sequences typically becoming single tokens.
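Segmenting new text with a trained BPE model amounts to replaying the learned merges. The Python sketch below applies them in the order they were learned; the merge list is a hypothetical example rather than output from a real tokenizer, and production tokenizers such as those used by GPT models add further steps like byte-level input and regex-based pre-splitting of the text.

```python
def merge_pair(tokens: list[str], t_l: str, t_r: str) -> list[str]:
    """Replace every adjacent (t_l, t_r) in `tokens` with the merged token t_l + t_r."""
    merged, i = [], 0
    while i < len(tokens):
        if i + 1 < len(tokens) and tokens[i] == t_l and tokens[i + 1] == t_r:
            merged.append(t_l + t_r)
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged


def bpe_encode(word: str, merges: list[tuple[str, str]]) -> list[str]:
    """Segment `word` by replaying learned merges in the order they were learned."""
    tokens = list(word)  # start from single characters
    for t_l, t_r in merges:
        tokens = merge_pair(tokens, t_l, t_r)
    return tokens


# Hypothetical merge list, as if produced by BPE training on some corpus.
merges = [("g", "o"), ("i", "n"), ("in", "g"), ("e", "r")]
print(bpe_encode("going", merges))  # ['go', 'ing']
print(bpe_encode("goer", merges))   # ['go', 'er']
print(bpe_encode("gong", merges))   # ['go', 'n', 'g']
```

"gong" is still encoded even though no merge produces it as a whole word: the encoder simply falls back to smaller pieces where no merge applies.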

It's important to note that different language models choose different tokenization strategies and vocabulary sizes depending on their intended use cases and architectural designs. Vocabulary size is especially critical: a larger vocabulary lets each token cover more text, producing shorter sequences, but it also enlarges the model's embedding and output layers, which affects model performance, training efficiency, and memory use.

The following table compares vocabulary sizes of popular language models:

| Model | Vocabulary Size |
|---|---|
| GPT-2 | 50,257 |
| GPT-3.5 / GPT-4 | ~100,000 |
| LLaMA 2 | ~32,000 |
| DeepSeek R1 | 131,072 |
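One concrete way vocabulary size affects memory: the token-embedding matrix has one row per vocabulary entry, so its parameter count is vocabulary size × hidden size. The Python sketch below works this out for two rows of the table, assuming hidden sizes of 768 (GPT-2 small) and 4,096 (LLaMA 2 7B) and 16-bit weights at 2 bytes per parameter; larger variants of these models use larger hidden sizes.

```python
def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """Parameters in a token-embedding matrix: one hidden_size-long row per token."""
    return vocab_size * hidden_size


BYTES_PER_PARAM = 2  # assumption: 16-bit (fp16/bf16) weights

# Hidden sizes are assumptions for illustration (GPT-2 small and LLaMA 2 7B).
for name, vocab_size, hidden_size in [("GPT-2", 50_257, 768), ("LLaMA 2", 32_000, 4_096)]:
    params = embedding_params(vocab_size, hidden_size)
    mib = params * BYTES_PER_PARAM / 2**20
    print(f"{name}: {params:,} parameters, about {mib:.1f} MiB")
# GPT-2: 38,597,376 parameters, about 73.6 MiB
# LLaMA 2: 131,072,000 parameters, about 250.0 MiB
```

If the output layer that predicts the next token is not tied to the embedding matrix, it adds another matrix of the same shape, doubling this cost.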

Popular tokenizer libraries

tiktoken

If you’re looking for a ready-to-use, fast tokenizer for encoding and decoding text at inference time, OpenAI’s tiktoken is an excellent choice. It provides the BPE encodings used by OpenAI’s models, including GPT-3.5 and GPT-4, and is built for speed and ease of use in production environments.

Hugging Face Tokenizers

For those seeking greater flexibility or wanting to build custom tokenization pipelines, Hugging Face’s tokenizers library is widely used. It supports several tokenization algorithms, including BPE, WordPiece, and Unigram, and lets you train custom tokenizers from scratch. Its integration with the broader Hugging Face ecosystem makes it well suited to research, experimentation, and production deployments.

SentencePiece

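SentencePiece, developed by Google, is another popular tokenizer library designed for unsupervised and multilingual tokenization. It is language-agnostic and supports both BPE and the unigram language-model algorithm. Because it treats input as a raw character stream, encoding whitespace as the "▁" symbol, it needs no language-specific pre-tokenization and handles text normalization internally, which makes it especially useful for multilingual models and for languages written without spaces between words.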

Alternatives to Tokenization

Alternatives to standard tokenization algorithms are increasingly being explored as a way to enhance the capabilities of large language models (LLMs). These approaches aim to address limitations of traditional tokenization, such as a rigid, fixed vocabulary, limited scalability, and inefficient handling of multilingual or otherwise complex text.

A notable recent advance is Meta’s Byte Latent Transformer (BLT), presented in the research paper "Byte Latent Transformer: Patches Scale Better Than Tokens". Instead of mapping text to discrete tokens from a fixed vocabulary, BLT works directly on raw bytes, grouping them into dynamically sized "patches" whose boundaries depend on how hard the next byte is to predict, and processes learned latent representations of these patches. Because patches are not tied to a fixed vocabulary, this gives the model a more flexible and scalable way to process text, letting it spend more computation on the parts of the input that are harder to predict.

By leveraging continuous byte-level latent spaces, Meta’s Byte Latent Transformer offers potential benefits including enhanced scalability, increased computational efficiency, and reduced preprocessing complexities. This shift towards token-free or token-light models opens exciting research directions, potentially paving the way for more adaptive, efficient, and universally applicable language models.
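To make the byte-level view concrete, the Python sketch below converts text to UTF-8 bytes, so every input maps onto a fixed alphabet of 256 byte values, and then groups the bytes into patches. The grouping rule here, closing a patch after each space byte, is a deliberately simple assumption for illustration; BLT instead places patch boundaries using the entropy of a small byte-level model's next-byte predictions. The function name `space_patches` is hypothetical.

```python
def space_patches(text: str) -> list[bytes]:
    """Split the UTF-8 bytes of `text` into patches, closing one after each space.

    A simplified stand-in for BLT's entropy-based patching (assumption for illustration).
    """
    patches, current = [], bytearray()
    for b in text.encode("utf-8"):
        current.append(b)
        if b == 0x20:  # an ASCII space byte ends the current patch
            patches.append(bytes(current))
            current = bytearray()
    if current:  # flush any trailing bytes as a final patch
        patches.append(bytes(current))
    return patches


text = "h\u00e9llo w\u00f6rld"  # "héllo wörld" with precomposed é and ö
print(len(text), len(text.encode("utf-8")))  # 11 13: é and ö take two bytes each
print(space_patches(text))                   # [b'h\xc3\xa9llo ', b'w\xc3\xb6rld']
```

No vocabulary has to be learned for this input representation: any string in any language becomes a sequence of byte values, and only the patching rule decides how those bytes are grouped.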

Luca Gilli, PhD, is CTO and co-founder of Clearbox AI, where he leads R&D and product development. Expert in generative AI, uncertainty quantification, and ML model validation, he is the inventor of Clearbox AI’s core synthetic data technology.
