A few weeks ago, I had the pleasure of speaking at TEDx Barletta, set in the beautiful and historic Barletta Castle. The conference's theme was "Noise", and I was invited to isolate the signal from the noise surrounding Artificial Intelligence and its implications.

Will AI replace humans? Will it steal our jobs? What if AI systems take over the world?

My talk delved into separating AI fact from fiction and highlighted the real risks of this technology and how to mitigate them.

Are we really experiencing an AI apocalypse?

In 1947, a group of atomic scientists, including Albert Einstein, started the symbolic Doomsday Clock to indicate how close we are to a human-induced global catastrophe caused by nuclear war and, more recently, climate change. The clock currently stands at 90 seconds to midnight, the closest we have ever been to catastrophe.

A couple of months ago, some of the biggest names in AI signed a short yet unsettling doomsday statement on AI. It reads: “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

These extinction risks are wide-ranging and the hypotheses endless, not too different from the plots of famous science fiction movies like Terminator or Ex Machina that we’ve all seen. In the hands of malicious actors, AI systems could be used to discover new viruses and chemicals for weapons of mass destruction, or to crack nuclear codes, and cause complete annihilation.

How does one feel about that? Anxious, frustrated, helpless, uncomfortable? While one has every right to feel that way, the fears may be there for the wrong reasons. No one wants these apocalyptic futures to become a reality, but such sensational predictions create noise and confusion. They shift the focus away from the actual AI risks we must worry about, which are already happening today. Unlike nuclear technology, AI is so pervasive that we don't even actively notice its presence. AI makes our lives very convenient. Think about movie recommendations on Netflix, suggestions to follow a group or a person on social media, turning on the lights with a voice command, or generating an image from a text prompt.

At the end of the day, Artificial Intelligence is a powerful combination of data and algorithms. These AI algorithms are data-hungry. They require massive amounts of data to train themselves to do their intended job. And if they get bad data, the results are poor, too. Garbage in, garbage out.

What are the risks associated with AI?

Data privacy

One of the biggest risks, and one we already know something about, is data privacy. The consequences can range from an awkward conversation with a friend who received your kitchen gossip to the social features of your fitness app compromising the security of military assets. Or worse, misuse of personal data could weaken the credibility of democratic elections by manipulating voters through their emotions and deepest fears.

Bias and stereotypes

The other big risk comes from bias and stereotypes. Take my story, for example. I was born and raised in India but have spent almost all my adult life in Europe, and when people approach me, they already have a preconceived notion about me. They think that I speak “Indian”, that I might be an IT professional (in fact, when I started a role in Sweden, I was referred to as “that Indian girl that doesn't work for IT”), and that I must know yoga. Most of the time, these are harmless assumptions, and I go on to say that “Indian” is not a language, I'm not an IT professional, and the last one is kinda true, I do know some yoga :)

Stereotypes simplify the world around us to help us estimate a new person's or group's preferences and behavior. And how we make assumptions is influenced by our upbringing, the society around us, the movies we see, and whatever else we are exposed to.

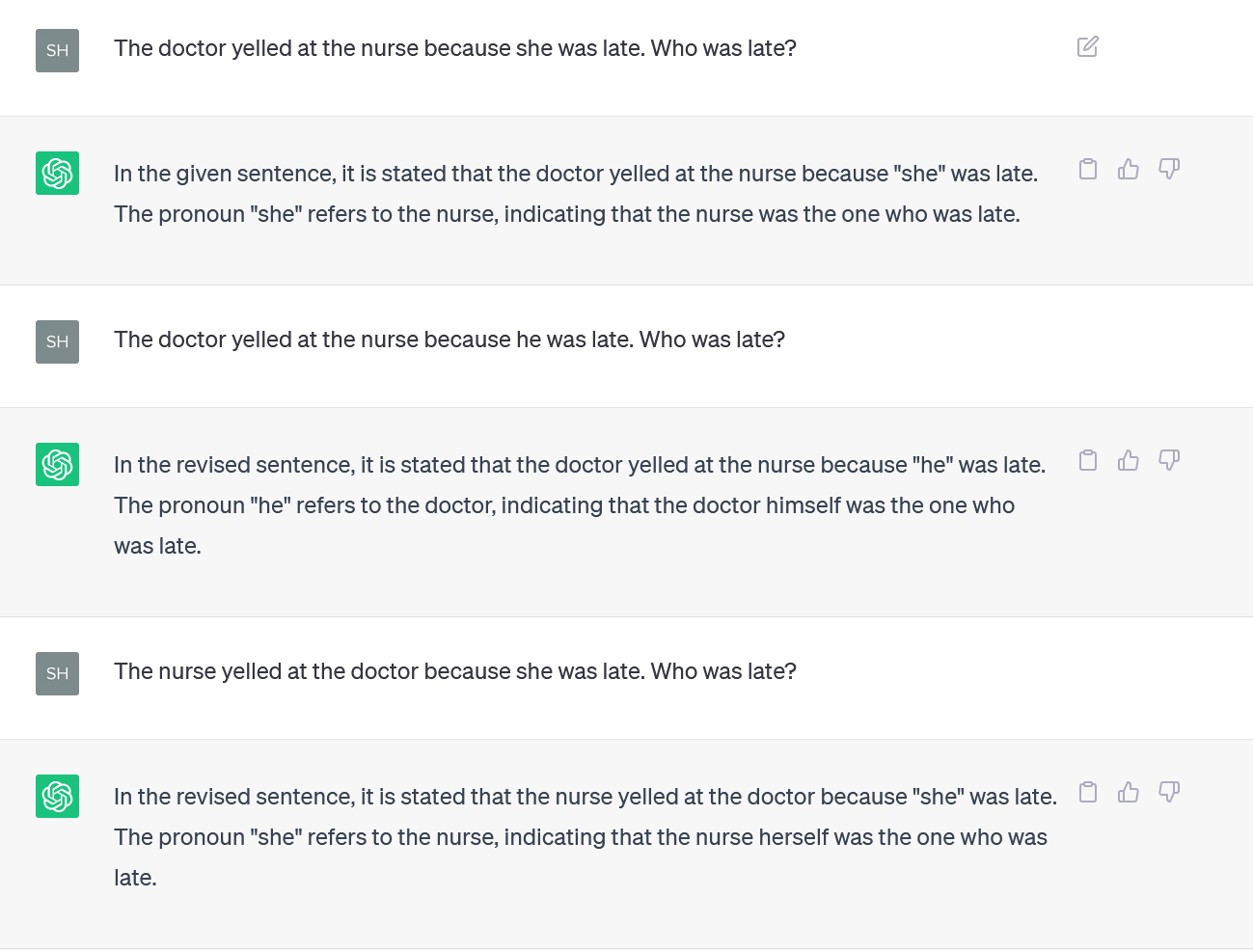

Close your eyes and imagine a CEO or a nurse. It’s more likely than not that you imagined a male CEO and a female nurse. Now it doesn’t feel so harmless in terms of progress, right? Still, we can tell ourselves: we are humans; we are not objective, we are not rational; we’ll do better next time.

What if all our stereotypes are systematically programmed into the AI we are developing and using, and somehow, we attribute rationality to them? You guessed right. DALL-E also thinks a CEO can only be a man and a nurse only a woman. And not just in images but even in textual outputs.

When I tried to ask ChatGPT some questions involving a doctor and nurse, it always assumed that the nurse was a “she,” even with the same sentence construction. These biases and stereotypes in AI can cause real harm.

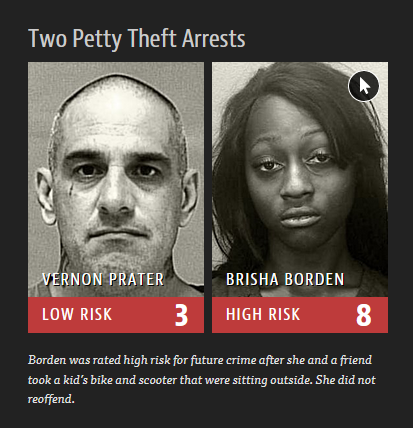

A predictive policing algorithm once used in the US rated a Black woman as having a higher risk of re-offending than a white man, even though he had more serious criminal charges.

Incomplete or non-representative data

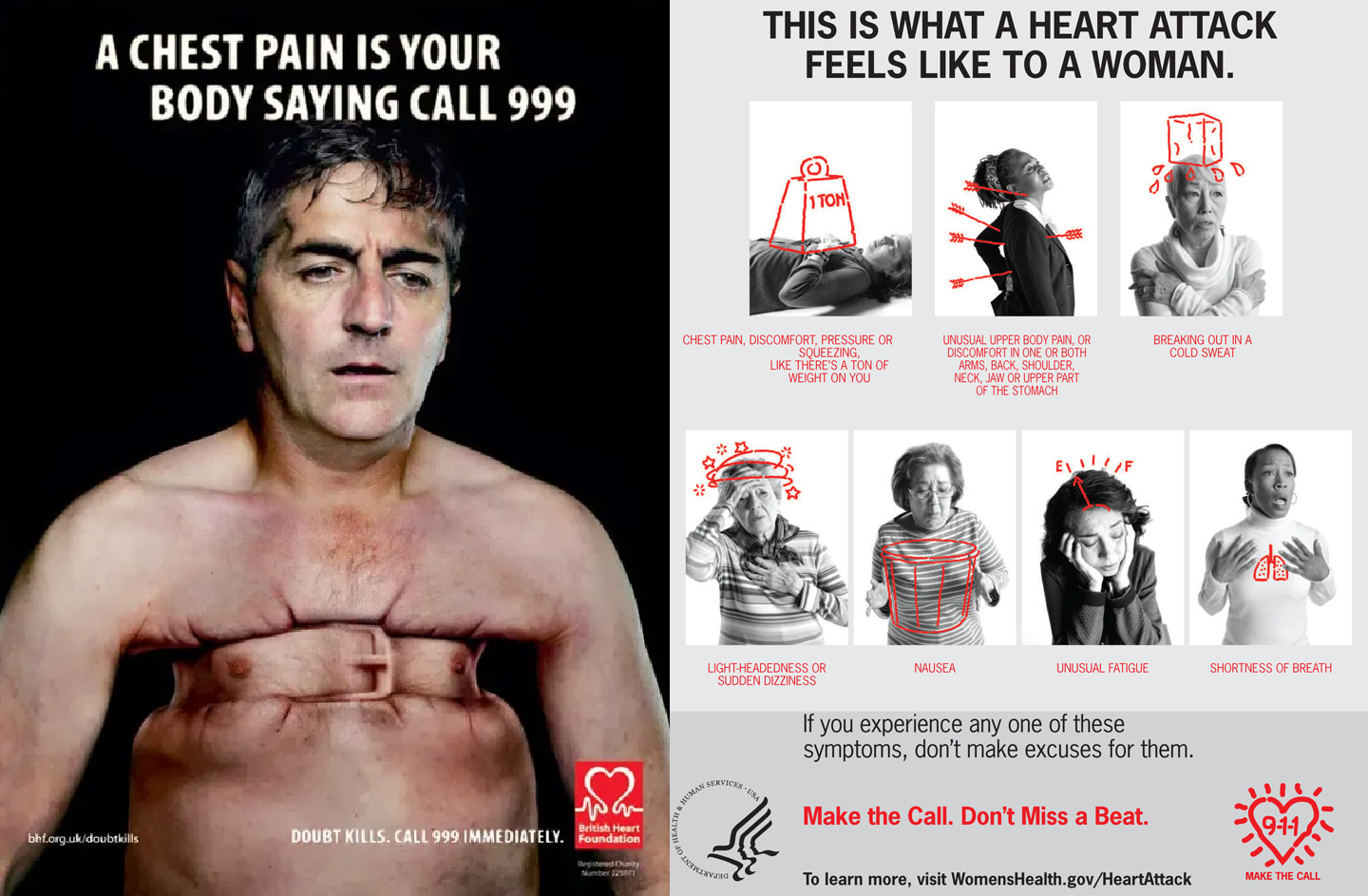

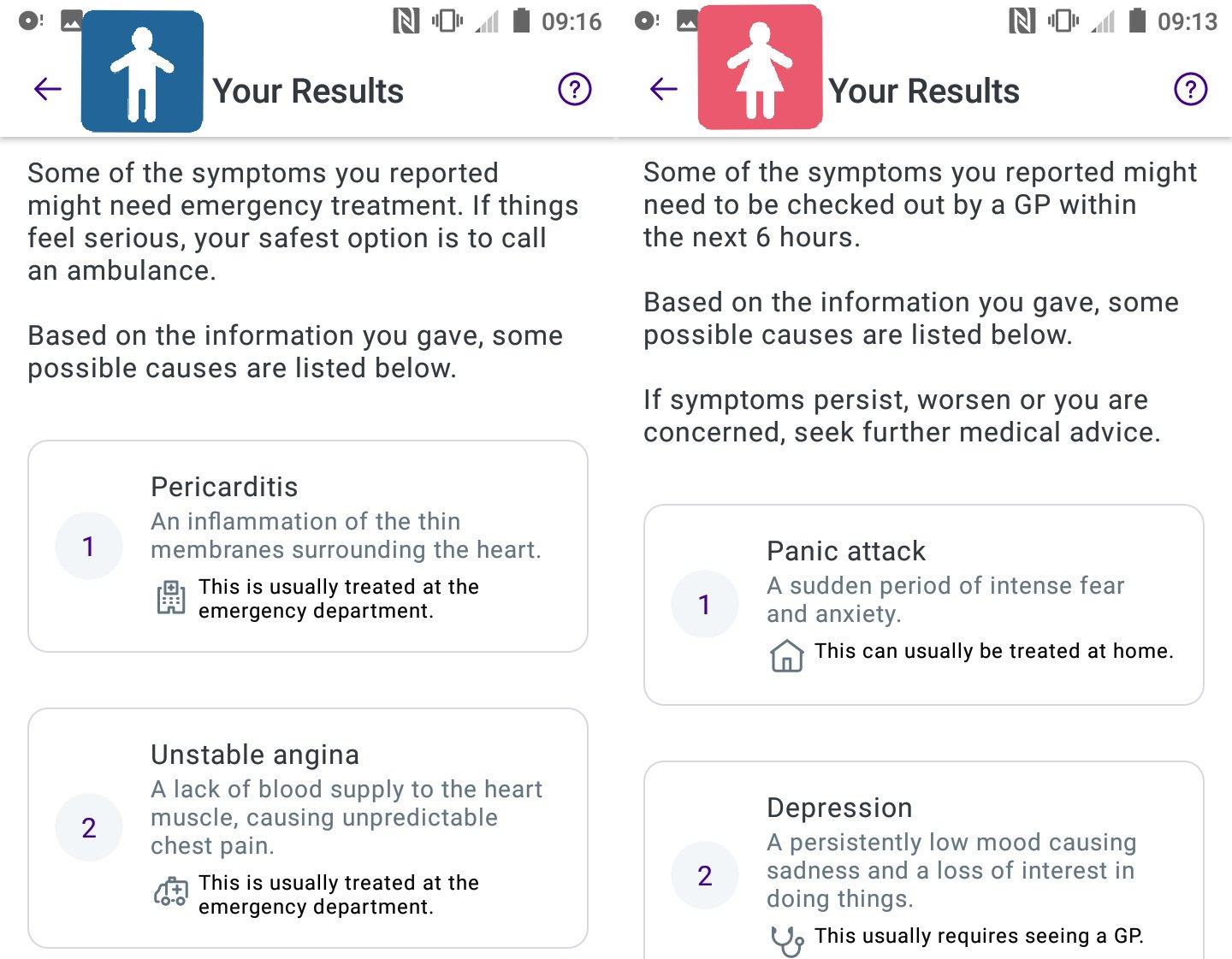

Let’s start with an example. Men and women can have different heart attack symptoms. For a man, it can be constricting chest pain; for a woman, it may be back pain.

Historically, most medical research was done on men, so there is more data, and more complete data, on men's ailments than on women's. So, when a company builds an AI-powered app to recognize the symptoms of a heart attack, the app correctly recognizes a man’s symptoms as a heart attack and directs him to rush to the hospital, while to a woman it says, “Calm down, it is a panic attack”. The data gap can literally cost lives.
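One way to make a gap like this visible is to measure how often an app catches real heart attacks separately for each group, instead of looking at a single overall score. The sketch below is only an illustration: the records are hand-made and hypothetical (not real patient data), and `recall_by_group` is a helper name I made up for this example.

```python
from collections import defaultdict

# Hypothetical, hand-made records for illustration only (NOT real patient data).
# Each tuple: (group, had_heart_attack, app_flagged_heart_attack)
records = [
    ("men", True, True),
    ("men", True, True),
    ("men", True, True),
    ("men", True, False),
    ("women", True, True),
    ("women", True, False),
    ("women", True, False),
    ("women", True, False),
]


def recall_by_group(rows):
    """Return, per group, the share of real heart attacks the app flagged."""
    caught = defaultdict(int)   # real heart attacks the app flagged
    actual = defaultdict(int)   # all real heart attacks
    for group, had_attack, flagged in rows:
        if had_attack:
            actual[group] += 1
            if flagged:
                caught[group] += 1
    return {group: caught[group] / actual[group] for group in actual}


recalls = recall_by_group(records)
for group, value in recalls.items():
    print(f"{group}: recall = {value:.2f}")
```

With these made-up numbers, the app catches three out of four heart attacks in men but only one out of four in women. An overall score of 50% would hide exactly the difference that matters.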

Non-inclusive and non-equitable outcomes

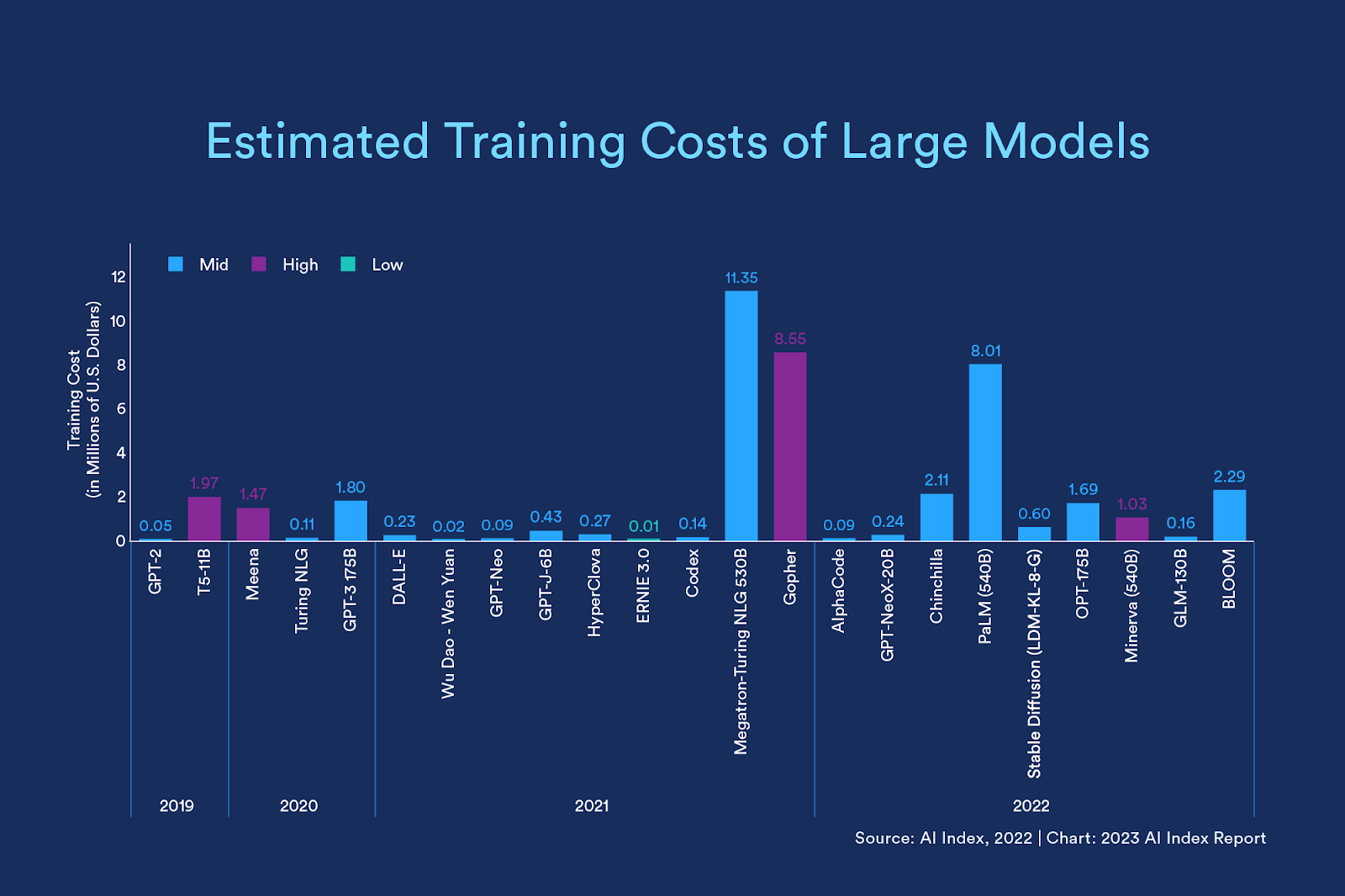

Who actually benefits from all this progress? ChatGPT doesn’t work so well in many non-English languages because there’s not enough data available, and this gap will negatively impact not only economies but also cultures. Not to mention the high cost of training these large AI models, ranging from hundreds of thousands to millions of dollars, and the enormous environmental impact of the computational resources they use.

The future and present of AI

These are the risks we should really focus on, because they are real and current, unlike the hypothetical apocalyptic scenarios of the future. We could just as well use AI to build many glorious futures, where AI is a brilliant collaborator in the fight against the grand and wicked problems we face as humans. For every apocalyptic scenario that we started this talk with, there could be a utopian one. Take drug discovery: discovering and designing new medicines for diseases takes more than ten years! With AI, this time could be shortened to less than a month. The cost and time savings will widen the search for medicines for rare and neglected diseases and open a new frontier for personalized medicine.

The United Nations has big hopes that AI will help its peacekeeping efforts by predicting conflict, facilitating rescue operations, translating, and analyzing local situations to better engage with relevant stakeholders. Experts believe that AI is an indispensable tool in our fight against climate change, whether to make sense of complex climate phenomena, to make predictions based on ginormous climate datasets and variables, or to assist in designing climate-friendly materials. And, of course, the big prize is the economic reward of AI adoption. PwC predicts that AI adoption could contribute up to 15.7 trillion dollars to the global economy by 2030, with America, Europe, and China emerging as the biggest beneficiaries of this progress.

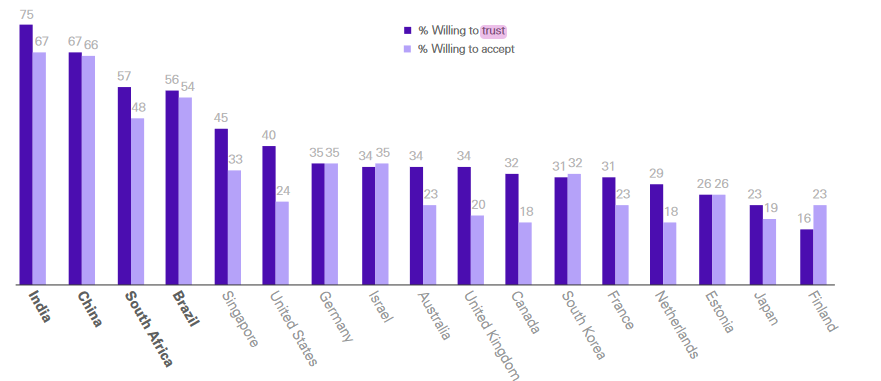

Naturally, all countries want a piece of this cake of progress, so attitudes towards AI and its risks vary widely. Emerging economies like India, Brazil, and South Africa are ready to embrace AI enthusiastically and are more likely to trust its outcomes than Western economies.

How to avoid the AI apocalypse?

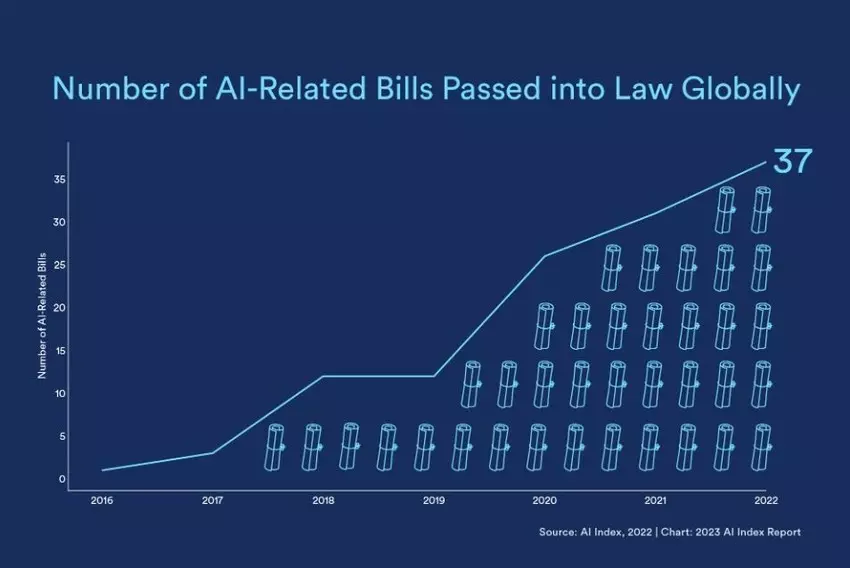

So there is really no stopping AI, but if we want to avoid an AI apocalypse and achieve the AI utopia we are so enthusiastic about, we must set up guardrails. Fortunately, there are some early efforts in this direction. The EU has approved a first draft of regulation for AI technologies, and the number of bills to regulate AI is steadily increasing worldwide. Regulating such rapidly evolving technologies will always be complex, but if we don’t start somewhere, we will never learn how.

And, of course, the most important thing we need to do is cut through the noise of sensational predictions and truly understand the real risks and benefits of this double-edged sword that is AI. We need better data (I can't stress this enough): better-quality, representative, privacy-friendly, and complete data. We need robust models with mechanisms to evaluate and test their performance and safety. And we need coordinated global agreements and regulations, just like we have for nuclear technology and climate change, because AI will impact everyone on Earth.

And lastly, I want to conclude with my favorite quote from Noam Chomsky's Optimism over Despair: “We have two choices. We can be pessimistic, give up, and help ensure that the worst will happen. Or we can be optimistic, grasp the opportunities that surely exist, and maybe help make the world a better place. Not much of a choice.”

Tags: blogpost

Shalini Kurapati, PhD, is an expert in data governance, privacy, and responsible AI. As co-founder and CEO of Clearbox AI, she focuses on building transparent and compliant data solutions.
